1. Nvidia (NVDA) – Earnings Review

a) Results vs. Expectations

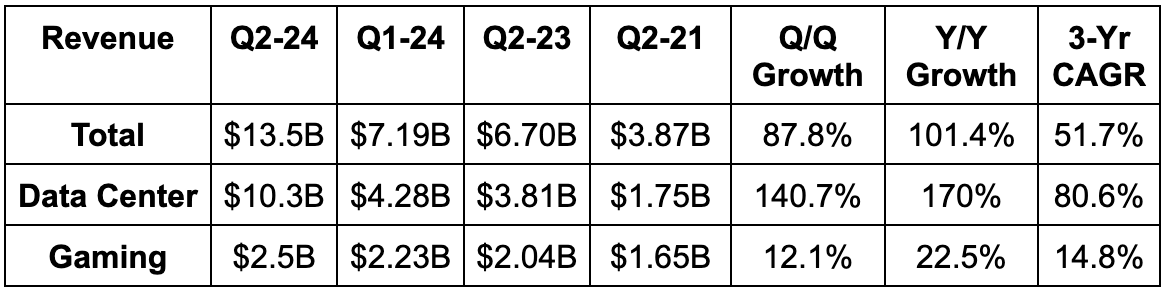

- Beat revenue estimates by 22% & beat guidance by 22.7%.

- Beat EBIT estimates by 31% & beat guidance by 34.1%.

- Beat EPS estimates by $0.39 & beat guidance by $0.44.

b) Next Quarter Guidance

- Beat revenue estimates by 29%.

- Beat EBIT estimates by 39.7%.

- It has “strong demand visibility” well into calendar 2024.

c) Balance Sheet

- $5.8 billion in cash & equivalents.

- $9.7 billion in total debt with $1.2 billion current (due within 12 months).

- Share count fell 1% Y/Y as it bought back $3.1 billion in stock vs. $3.3 billion Y/Y.

- Announced a new $25 billion buyback program to bring its total current buyback capacity to $29 billion.

- Dividends are flat Y/Y.

d) Call & Release Highlights

The Generative AI Wave:

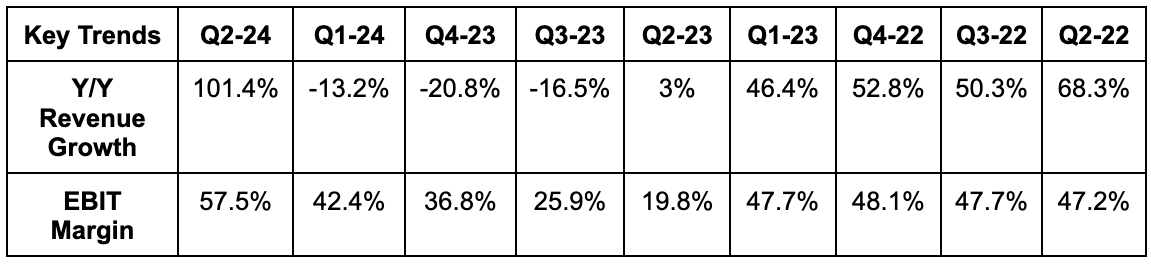

The shift in data centers from general-purpose computing to accelerated computing and AI is in full swing, and Nvidia is best positioned to capitalize. This newer approach offloads a large chunk of the work that central processing units (CPUs) used to handle, making the remaining CPUs more efficient and somewhat less needed. That means cost savings. Furthermore, cloud service providers, large internet players and enterprises from all industries are racing to rip and replace their old infrastructure with Nvidia’s Hopper 100 (H100) graphics processing units (GPUs), thanks to the vast cost and efficiency edges these chips bring to the table. These edges allow Amazon to cut costs while enhancing service quality and shrinking its carbon footprint, and they helped Meta’s Reels algorithm power more than 20% growth in engagement.

Nvidia’s lead in efficiently training models across use cases is simply unmatched… nobody comes close. Its inference technology is also widely considered the leader, but the gap vs. competition is less drastic. Training is where a model learns from large datasets and refines its parameters so it can answer queries and surface information more precisely. Inference is where the trained model applies those learned patterns to predict and generate new information. Training happens at the beginning… then inference takes over. Both are vital to effectively extract value from AI apps and large language models (LLMs).

Along the same AI theme, its networking revenue also doubled Y/Y thanks to its InfiniBand platform for high-performance computing. This product doubles performance vs. legacy Ethernet alternatives, an edge worth “hundreds of millions” to its large clients. If you’re noticing a theme of Nvidia delivering both cost and performance edges, you’re right. Nvidia calls the platform the “only infrastructure within networking that can scale to hundreds of thousands of GPUs,” making it the “network of choice” in AI.

Supply:

Nvidia’s H100 chips are supply constrained. It praised its partners for “exceptional capacity ramps,” but it still can’t meet all of its demand. That’s incredible considering how mammoth a quarter this still was. The company sees supply constraints easing throughout the year and into 2024, allowing it to better meet customer needs. Cycle times are shrinking and capacity partners are being added.

New Products:

- Its new “enterprise ready servers” featuring its L40S GPU are expected to ease some of the supply scarcity in accelerated computing.

- Its next-generation Grace Hopper 200 (GH200) Superchip is in full production. This is 90% Nvidia GPUs and 10% Arm CPUs. An even newer version of this chip is set to be available next spring. It’s always iterating.

- Its DGX (the name of its full-stack platform) GH200 supercomputer for AI models will be available within the next 5 months. It connects 256 GH200 chips to operate as a single unit vs. previously only being able to connect 8 at a time. This drives cost and performance efficiencies.

China:

Management does not expect export restrictions on its data center GPUs to China to immediately impact results, given the sheer magnitude of current global demand. Still, if further restrictions are implemented, they would shrink the opportunity to some extent and place a lower ceiling on where generative AI and accelerated computing can take Nvidia.