Nvidia designs semiconductors for data center, gaming and other use cases. It’s widely considered the technology leader in chips meant for accelerated compute and Generative AI (GenAI) use cases. The following are important acronyms and definitions to know for this company:

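- GPU: Graphics Processing Unit. This is an electronic circuit originally designed to process visual information and data. Its highly parallel design also makes it well suited to accelerated compute and GenAI workloads.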

- CPU: Central Processing Unit. This is a different type of electronic circuit that carries out the general-purpose tasks and data processing that applications require. Teachers will often call this the “computer’s brain.”

- DGX: Nvidia’s full-stack platform combining its chipsets and software services.

- Hopper: Nvidia’s modern GPU architecture designed for accelerated compute and GenAI. Key piece of the DGX platform.

- H100: Its flagship Hopper GPU. (The H200 is a newer Hopper GPU.)

- L40S: Another, more barebones GPU chipset based on Ada Lovelace architecture. This works best for less complex needs.

- Ampere: The GPU architecture that Hopper replaces for a 16x performance boost.

- Grace: Nvidia’s new CPU architecture designed for accelerated compute and GenAI. Key piece of the DGX platform.

- GH200: Its Grace Hopper 200 Superchip, which pairs a Grace CPU (built on Arm Holdings’ architecture) with a Hopper GPU.

- InfiniBand: Interconnectivity tech providing an ultra-low latency computing network.

- NeMo: Nvidia’s framework for building and customizing GenAI models for client-specific needs. It provides a standardized environment for model creation.

- CUDA: Nvidia’s parallel computing platform and programming model, purpose-built for Nvidia GPUs. CUDA helps power things like Nvidia Inference Microservices (NIM), which guide the deployment of GenAI models (after NeMo helps build them).

- NIM helps “run CUDA everywhere,” in both on-premises and hosted cloud environments.

- GenAI Model Training: One of two key layers of model development. This seasons a model by feeding it specific data.

- GenAI Model Inference: The second key layer of model development. This puts trained models to work to create new insights and uncover new, related patterns. It connects data dots that we didn’t realize were related. Training comes first; inference comes second, third, fourth and so on, because a trained model runs inference every time it is used.
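To make the training/inference split concrete, here is a deliberately tiny Python sketch. It assumes a one-variable linear model fitted by ordinary least squares, a stand-in chosen for brevity and not how GenAI models are actually built; `train` and `infer` are hypothetical names. The point is the shape of the work: the model is fitted once, then queried repeatedly.

```python
# Toy illustration only: a one-variable linear model, not a GenAI model.


def train(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Training: fit slope and intercept once with ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def infer(model: tuple[float, float], x: float) -> float:
    """Inference: apply the already-trained parameters to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Training happens once, on existing data...
model = train([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0])

# ...while inference runs again and again on new inputs.
predictions = [infer(model, x) for x in (5.0, 6.0, 7.0)]
print(predictions)  # [10.0, 12.0, 14.0]
```

Every new query reuses the trained parameters, which is why inference demand keeps growing long after training is done.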

a. Demand

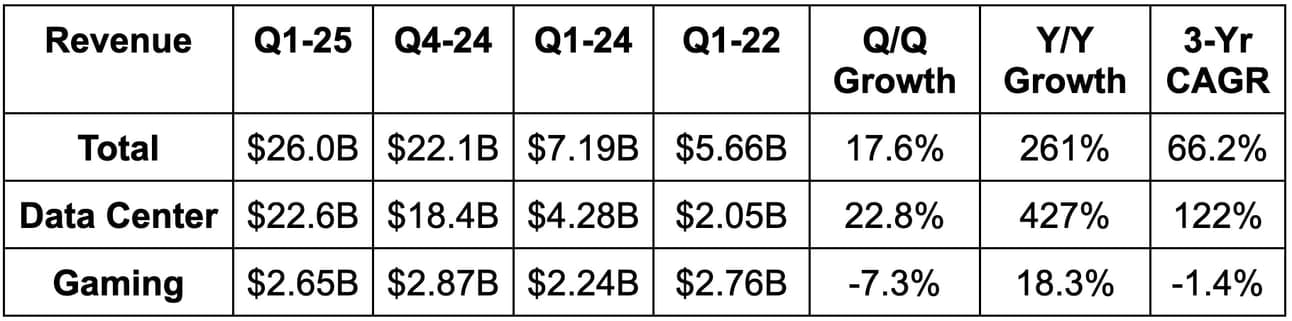

- Beat revenue estimates by 5.5% & beat guidance by 8.3%.

- Beat data center revenue estimates by 6.6%.

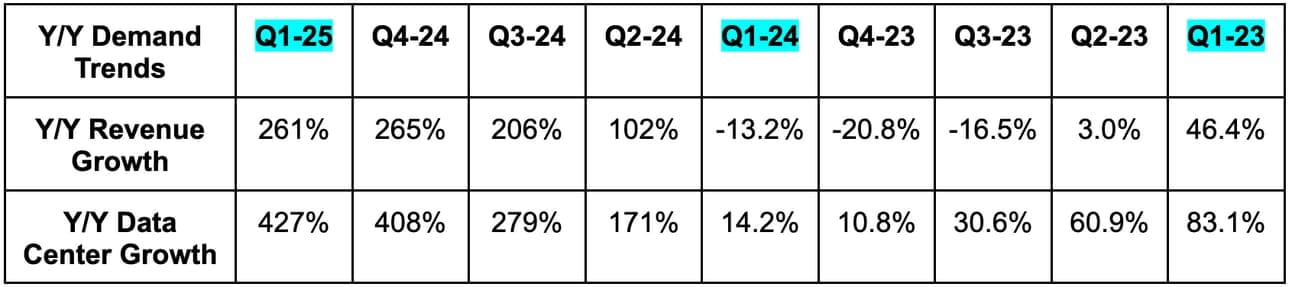

Nvidia’s 3-year revenue compound annual growth rate (CAGR) continues to accelerate: 66.2% this quarter compares to 64% last quarter and 56% two quarters ago.
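The CAGR math can be sketched in a few lines of Python; `cagr` and `growth_multiple` are hypothetical helper names, not from any library. Run in reverse, a 66.2% 3-year CAGR implies revenue multiplied roughly 4.6x over those three years (1.662 cubed ≈ 4.59).

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1


def growth_multiple(rate: float, years: float) -> float:
    """How many times a starting value multiplies at a given CAGR."""
    return (1 + rate) ** years


# 3-year revenue CAGRs quoted above.
quoted = {"this quarter": 0.662, "last quarter": 0.64, "two quarters ago": 0.56}
for label, rate in quoted.items():
    multiple = growth_multiple(rate, 3)
    print(f"{label}: {rate:.1%} CAGR -> revenue grew {multiple:.2f}x over 3 years")
```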

b. Profits & Margins

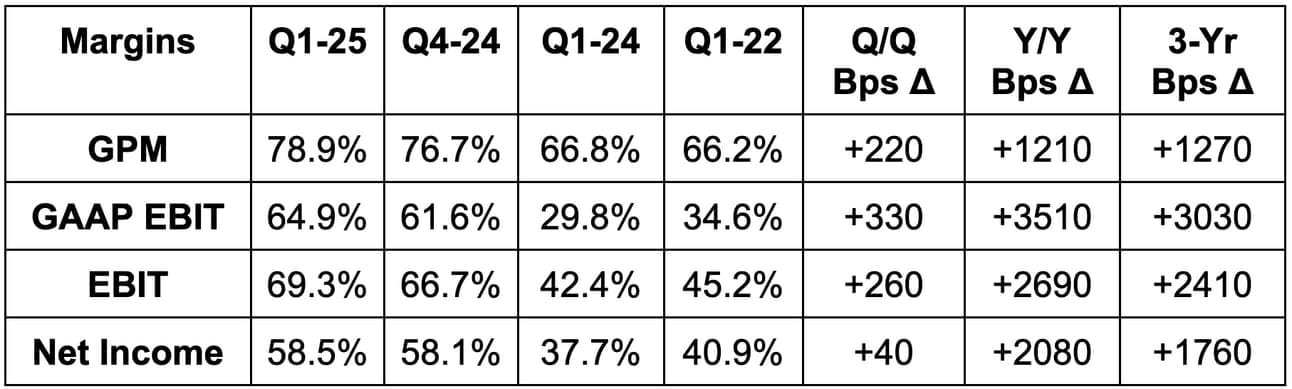

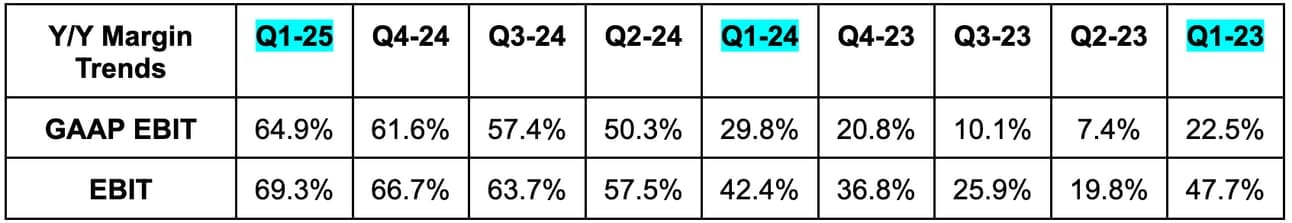

- Beat EBIT estimates by 10.8% & beat EBIT guidance by 12.9%.

- Beat $5.18 GAAP EPS estimates by $0.80 & beat GAAP EPS guidance by $0.96.

- GAAP and non-GAAP operating expense (OpEx) growth continued to lag revenue growth. Love it.

- Beat the 77% gross profit margin (GPM) estimate (and identical guidance) by 190 basis points (bps; 1 basis point = 0.01 percentage points). Rapid Y/Y GPM expansion was helped considerably by lower inventory charges.
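The beat arithmetic above can be checked with a short Python sketch. It assumes a beat is measured as (actual - estimate) / estimate; `beat_pct` and `bps_to_points` are hypothetical helper names. On that basis, the $0.80 GAAP EPS beat is roughly 15.4% above estimates, and the 190 bps beat implies a GPM of about 78.9%.

```python
def beat_pct(actual: float, estimate: float) -> float:
    """Size of a beat as a fraction of the estimate."""
    return (actual - estimate) / estimate


def bps_to_points(bps: float) -> float:
    """Convert basis points to percentage points (1 bp = 0.01 point)."""
    return bps / 100


# GAAP EPS: a $0.80 beat on a $5.18 estimate implies $5.98 reported.
eps_estimate = 5.18
eps_actual = eps_estimate + 0.80
print(f"GAAP EPS ${eps_actual:.2f}, {beat_pct(eps_actual, eps_estimate):.1%} above estimates")

# Gross margin: the 77% estimate plus a 190 bps beat.
gpm_actual = 77.0 + bps_to_points(190)
print(f"Implied GPM: {gpm_actual:.1f}%")
```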

c. Balance Sheet

- $31.4B in cash & equivalents.

- $9.7B in debt.

- Share count is roughly flat Y/Y.

- Raised its quarterly dividend from $0.04 to $0.10.

- Announced a 10-for-1 stock split.

d. Q2 Guidance & Valuation

- Revenue guidance beat estimates by 4.9%.

- EBIT guidance beat estimates by 4.8%.

- EPS guidance of roughly $6.21 beat estimates by $0.21. This assumes a flat share count Q/Q: Nvidia only guides for revenue, gross margin, OpEx, non-operating income and tax, so we have to assume a share count to derive EPS.

- It sees 40%-43% Y/Y OpEx growth. This compares very nicely to 86% Y/Y revenue growth expected by sell-siders.
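A rough way to see why that gap matters: when OpEx grows slower than revenue, OpEx as a share of revenue shrinks by the factor (1 + OpEx growth) / (1 + revenue growth). The sketch below assumes both rates apply to the same base period, which the company outlook and the sell-side estimate may not exactly share; `opex_share_change` is a hypothetical name.

```python
def opex_share_change(opex_growth: float, revenue_growth: float) -> float:
    """Next period's OpEx/revenue ratio divided by this period's ratio.

    Assumes both growth rates are measured over the same base period.
    """
    return (1 + opex_growth) / (1 + revenue_growth)


REVENUE_GROWTH = 0.86  # sell-side Y/Y revenue growth estimate quoted above
for opex_growth in (0.40, 0.43):  # low and high end of the OpEx growth outlook
    change = opex_share_change(opex_growth, REVENUE_GROWTH)
    print(f"OpEx +{opex_growth:.0%}: OpEx/revenue shrinks to {change:.1%} of its prior level")
```

Under these assumptions, OpEx would eat roughly 23%-25% less of each revenue dollar.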

Nvidia trades for 36x forward EPS. EPS is expected to grow by 95% Y/Y this year (probably closer to 100% Y/Y now) and by 26% Y/Y next year.
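Rolling the multiple forward is simple division, sketched below under the assumption that the share price stays constant; `rolled_forward_pe` is a hypothetical helper. On 26% EPS growth, a 36x forward multiple would compress to roughly 28.6x next year’s EPS.

```python
def rolled_forward_pe(current_pe: float, eps_growth: float) -> float:
    """P/E on next year's EPS, assuming the share price does not move."""
    return current_pe / (1 + eps_growth)


# 36x forward EPS, with EPS expected to grow 26% Y/Y the following year.
print(f"{rolled_forward_pe(36, 0.26):.1f}x")
```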

e. Call & Release

Data Center Demand:

As you can tell from the masterful results, demand for Nvidia’s GenAI infrastructure is off the charts, and it shows up most directly in the data center business. Demand across all types of end customers and all geographies remains ahead of supply.