Table of Contents

1. AMD (AMD) – Earnings Review

If there’s one thing the semiconductor industry loves, it’s constantly changing the names of products with an alphabet soup of acronyms for us to juggle. Fun, fun. Those acronyms all fall into neat categories: chips, networking and connectivity, and software. It’s these ideas and AMD’s positioning within them that matter to investors, not whether they’ve memorized what an MI325 HBM3E chip stands for. That’s how we’ll frame this coverage, with an emphasis on data center results.

GPU: Graphics Processing Unit. This is an electronic circuit used to process information and data. The accelerated compute needed for GenAI apps and models pulls from next-gen GPUs. AMD thinks its “MI” series of GPUs (part of the “Instinct” product family) boasts best-in-class memory and bandwidth, which Nvidia would certainly disagree with. AMD also thinks its 2025 Instinct release will compete with Nvidia’s world-class Blackwell platform.

CPU: Central Processing Unit. This is a different type of electronic circuit that carries out assignments and data processing. CPUs fall in the general compute bucket. General compute CPUs are still optimal for static, step-series and instruction-based tasks. They’re also much cheaper than deploying next-gen GPUs when they can work for the specific use case. AMD’s new AI data center CPUs “extend leadership in performance per watt and dollar.”

NPU: Neural Processing Unit. Used for AI-enabled personal computers (PCs).

TOPs: Tera Operations Per Second. This measures NPU performance, with more TOPs being better. AMD’s new Ryzen AI PC has 20% more TOPs than Microsoft’s best unit. To AMD, TOPs superiority is imperative for running Copilots and GenAI apps on PCs with optimal latency, hallucination rates and performance. It’s how the firm claims to be a “leader in AI inference on the PC side.”

a. Results

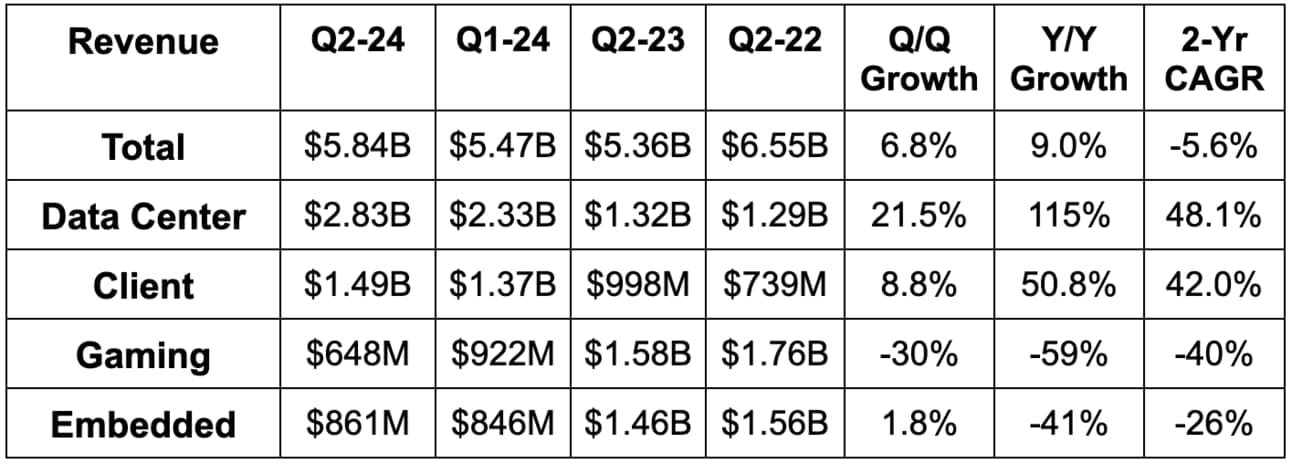

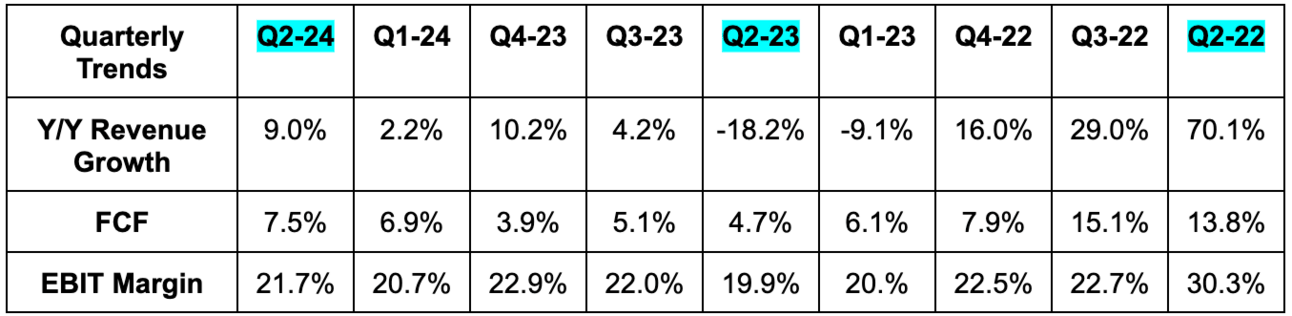

- AMD beat revenue estimates by 2.1% & beat guidance by 2.5%. The data center segment is where AMD’s progress in GenAI can be most clearly seen.

- Adobe, Boeing, Siemens and Uber were all cited as enterprise customer win highlights during the quarter.

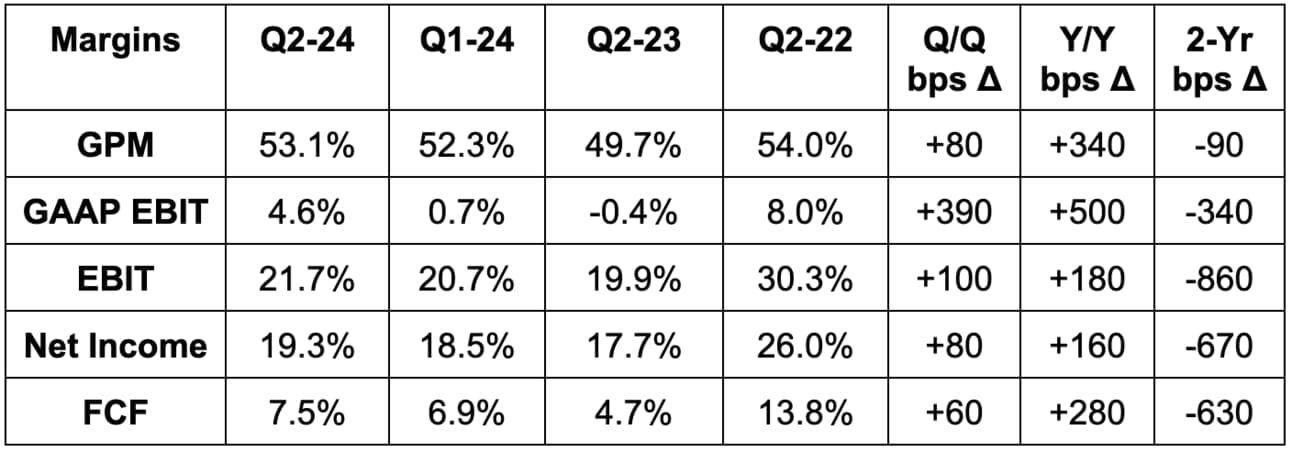

- Slightly beat GPM estimate & met GPM guidance.

- Beat EBIT estimates by 0.8%.

- Operating expenses rose 15% Y/Y due to R&D investments to support the need for rapid innovation.

- Met EPS estimate. EPS rose by 19% from $0.58 to $0.69 Y/Y.

- Slightly missed GAAP EPS estimate.

- Note that Xilinx M&A continues to heavily impact GAAP margins.
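As a quick sanity check on the EPS bullet above, the growth rate can be reproduced from the two quoted figures. This is only a minimal sketch; the helper name `pct_change` is an illustrative choice, not something from AMD's report.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100


# EPS figures quoted in the results above
eps_prior_year = 0.58
eps_this_year = 0.69

eps_growth = pct_change(eps_prior_year, eps_this_year)
print(f"EPS growth Y/Y: {eps_growth:.0f}%")  # prints "EPS growth Y/Y: 19%"
```

The same helper works for any of the Y/Y comparisons in this section, provided both the prior and current values are known.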

b. Balance Sheet

- $5.3B in cash & equivalents.

- $1.7B in total debt. Retired $750 million in debt during the quarter with its cash pile.

- Share count ~flat Y/Y.

c. Guidance & Valuation

Q3 revenue guidance beat by 1.5%, while its 53.5% GPM guide missed 54.0% estimates. Its EBIT guidance missed by 4.5%. It also raised its data center GPU annual revenue guidance from at least $4 billion to at least $4.5 billion.

AMD trades for 39x 2024 earnings. Earnings are expected to grow by 27% Y/Y this year and by 60% Y/Y next year. Here’s how a 39x earnings multiple compares to its historical norms:
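The historical comparison aside, the growth expectations above also imply a forward multiple. The sketch below rolls the 39x figure forward one year. It assumes the share price stays constant and that the 60% growth expectation is met, both of which are simplifying assumptions rather than forecasts.

```python
# Figures quoted above
pe_on_2024_earnings = 39.0
expected_growth_2025 = 0.60  # expected Y/Y earnings growth next year

# Assumption: price unchanged, so the multiple shrinks as earnings grow
pe_on_2025_earnings = pe_on_2024_earnings / (1 + expected_growth_2025)
print(f"Implied multiple on 2025 earnings: {pe_on_2025_earnings:.1f}x")  # 24.4x
```

If earnings growth comes in below expectations, the implied forward multiple is correspondingly higher.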

d. Call

The GenAI Opportunity:

AMD credited its successful quarter to an acceleration in AI business revenue (seen in the data center category). Its traction within accelerated compute (Ryzen and MI processors) as well as networking technology is building.

In data center, GPUs, CPUs and networking are the three buckets to focus on. Its newest Instinct accelerators will be available in Q4 2024, with “leading memory and compute performance.” Its 2025 iteration of the Instinct GPUs will pull from a new architecture (called CDNA 4) to deliver 35x the inference performance of its predecessor.

At this year’s Computex event, hyperscalers like Azure debuted more cloud instances using AMD Instinct accelerators; this means more of Azure’s hosted infrastructure for clients is running on AMD hardware. Microsoft is also using the firm’s MI chips (which combine GPUs and CPUs) for Copilot alongside AMD’s ROCm GPU software. ROCm offers a slew of tools and integrations to make using AMD’s chips across applications more convenient. Nvidia’s NVIDIA Inference Microservices (NIMs) and other software tools are seen as having a large edge in GenAI value proposition, so it’s good to see best-in-class cloud players embracing ROCm.

- The MI chip series crossed $1 billion in quarterly revenue.

- Hugging Face is using Azure instances powered by AMD.

- Its AI enterprise and cloud customer pipelines rose Q/Q.

- Several customers are using MI processors and its ROCm software in tandem with their Llama 3.1 (Meta open source model) work.

In PC, its newest Ryzen AI processors boast an industry-leading 50 TOPs for AI processing power. It will help run Windows Copilot+.

In networking, with the help of partners like Broadcom and Cisco, it’s piecing together best-in-class tools with its “Infinity Fabric” connectivity tech to emulate the vertically integrated offering that Nvidia has. The “Ultra Accelerator Link” is a group of companies using AMD’s networking tools to build more open, scalable and reliable GPU connections. It will create an “industry standard for connecting AI accelerators.” More connections mean better efficiency, better bandwidth and better client results.

Chip companies are always iterating and always improving… that is vital when Nvidia is moving as rapidly as it is. And speaking of moving quickly, AMD has committed to a 12-month pace of new GPU platform introductions to rival Nvidia’s “rhythm.” Its Instinct roadmap will stick to this annual pace. 2-3 years used to be a normal cadence for platform launches. Things just move more quickly today.

General Compute:

While GPUs get all of the GenAI hype, general compute CPUs are still quite relevant. And as GenAI raises overall compute capacity needs, CPU demand will indirectly benefit in some areas. Wherever CPUs can still be a viable option, companies can realize material cost savings by deploying the cheaper technology. If they can get away with it without sacrificing product quality, they should. Partially thanks to this and an overall rebound in general compute demand, its newest Zen 5 CPU architecture, built right into its newest EPYC CPU processors, is thriving. It will soon release the 5th generation of its EPYC processors (called Turin). This is set to “extend AMD’s total cost of ownership (TCO) leadership,” with shipments already starting this past quarter.

AMD EPYC cloud instances offered from hyperscalers rose 34% Y/Y. Instances simply refer to available servers hosted by a hyperscaler like AWS or Azure and powered by AMD processors. These EPYC instances are also in high demand, as companies like Netflix and Uber are using them to power “customer facing, mission-critical workloads.” Oracle’s “HeatWave” offering, which helps customers with accelerated compute and GenAI transformations, is powered by the 4th gen EPYC processors.

“We saw positive demand signals for general-purpose compute in both our client and server processor businesses.”

CEO Lisa Su

Segment Performance:

Data center was driven by a “steep ramp” of Instinct GPU shipments, which also drove considerable operating leverage. EBIT margin was 26% vs. 11% Y/Y.

Client revenue growth was thanks to Ryzen processor sales. This also drove fixed cost leverage and EBIT margin expansion for it. EBIT margin was 6% vs. -7% Y/Y.

In gaming, revenue tanked due to a tough environment for semi-custom chip revenue. This led to EBIT margin falling from 14% to 12%. Struggles here will likely continue for the hyper-cyclical revenue segment.

In Embedded, revenue tanked due to continued inventory resets from customers. Embedded chips are designed for specific apps with less intensive, specialized compute when compared to general purpose CPUs. The revenue decline led to EBIT margin falling from 52% to 40%. AMD is “seeing early signs of order patterns improving and expect this segment to gradually recover during the second half of the year.”

M&A:

AMD announced Silo AI as its 3rd recent AI-related acquisition last week. It has also invested another $125 million across 12 other AI companies. Silo AI is Europe’s largest private AI lab and comes with a deep bench of talent to “extend AMD’s capability to service large enterprise customers looking to optimize AI solutions for AMD hardware.” It sounds like this will be an upgrade to its ROCm software. This should make it easier to work with AMD on GPU performance optimization, maximizing connectivity, allocating compute and ensuring consistent up-time of that compute power. It could also help AMD build needed application programming interfaces (APIs) to make it easier to service customers using Nvidia GPUs. Nvidia has done a wonderful job of building large reliance on its software suite. That’s partially due to how well the products work and also due to some vendor lock. It’s a lot easier to use Nvidia software with those chips than any other software. This will ideally help in that area.

e. Take

We’ve heard from pretty much every hyper-scaler this quarter that customers are pushing back against the absurd pricing power that Nvidia commands. Sure, Nvidia’s tech lead warrants that pricing power for now, but many firms are scrambling to try to emulate Nvidia’s best-in-class tech at a more modest price tag. I don’t think any of them will catch up any time soon, but two additional notes here:

- AMD probably has the best chance (even compared to hyper-scalers).

- They don’t need to catch up.

It doesn’t need a single chip to be as powerful as Nvidia’s Blackwell (or 2026 Rubin) project. It needs to provide bundles of chips (for likely less margin) to emulate those next-gen products. And it needs strong networking tech partnerships to make sure the processors work well together. It seems to have those ingredients in place. In an overly simplistic way of thinking, it needs 5 Instinct GPUs to cost less than 1 Blackwell GPU and to do as much as that single GPU can do. That’s how it can compete in the near term in accelerated compute GPUs, and the beginnings of its data center explosion point to it making headway.
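The bundle argument above can be sketched as a simple check. Every number below is a placeholder chosen purely for illustration, not real pricing or benchmark data, and the `Chip` class, `bundle_competes` function and `scaling_efficiency` parameter are hypothetical names. The efficiency factor is an assumed stand-in for how well networking lets several chips act as one.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    name: str
    price: float        # hypothetical unit price in dollars
    performance: float  # hypothetical throughput in arbitrary units


def bundle_competes(challenger: Chip, incumbent: Chip, bundle_size: int,
                    scaling_efficiency: float = 1.0) -> bool:
    """True if a bundle of challenger chips matches the incumbent chip's
    performance while costing less in total.

    scaling_efficiency (0 to 1) discounts the bundle's combined performance
    to reflect imperfect chip-to-chip connectivity (an assumption).
    """
    bundle_price = challenger.price * bundle_size
    bundle_perf = challenger.performance * bundle_size * scaling_efficiency
    return bundle_perf >= incumbent.performance and bundle_price < incumbent.price


# Placeholder figures, NOT real prices or benchmarks
challenger = Chip("challenger GPU", price=15_000, performance=22)
incumbent = Chip("incumbent GPU", price=80_000, performance=100)

perfect_scaling = bundle_competes(challenger, incumbent, bundle_size=5)
lossy_scaling = bundle_competes(challenger, incumbent, bundle_size=5,
                                scaling_efficiency=0.9)
print(perfect_scaling, lossy_scaling)
```

With these made-up inputs, the bundle clears the bar under perfect scaling but falls short once 10% of its combined performance is lost to connectivity overhead, which is one way to see why the networking partnerships matter to the thesis.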

This is a phenomenal company. Lisa Su is a fantastic CEO. She supplanted a deeply entrenched Intel across PCs and general compute data centers. Still, semiconductors are cyclical and this GenAI cycle will not last forever. The bear vs. bull debate centers around the “how long” rather than whether this sector is still cyclical… it is. With that said, commentary from mega caps does point to demand not slowing down near term and this data center result shows you that AMD is capable of taking advantage.

2. Palantir (PLTR) – Earnings Review

Palantir 101:

Palantir is a software company that helps customers get the most out of their structured and unstructured data. Like many others, it pulls from years of AI/ML work to automate insight-gleaning. It utilizes complex neural networks to power anomaly detection, trend forecasting and natural language processing too. Overall, it frees clients to conjoin disparate data sources while utilizing its software to uncover ideas that manual analytics and legacy competition cannot derive. It gives customers a bird’s-eye view of their operations, with detailed suggestions to help optimize products and workflows.

Revenue is neatly split into two buckets – “government” and “commercial.” Government clients predominantly use its Gotham product platform, while commercial clients mainly use its Foundry product platform. With Gotham, Palantir routinely builds custom use cases for individual government clients. Foundry was built to be more malleable, with far more pre-built app integrations available. That diminishes the need to conduct custom builds for every single private enterprise. It still does a lot more custom building than a typical enterprise software firm will.

It has also seamlessly leveraged the commercial platform to cater to industry-specific needs. By-industry large language models (LLMs) are intuitively named “micro-models.” These boast sector-specific use cases with granular, relevant regulatory compliance help. A financial services model from Palantir, for example, may specialize in assessing credit risk or fraud detection.

Palantir Apollo is its software suite, which provides continuous integration and continuous delivery (CI/CD) to automate software package building and deployment. It’s a foundational piece of the firm’s ability to collect, utilize and drive value from broad data ingestion. Apollo ties very closely into Foundry and Gotham as a software enabler for both platforms.